Problem

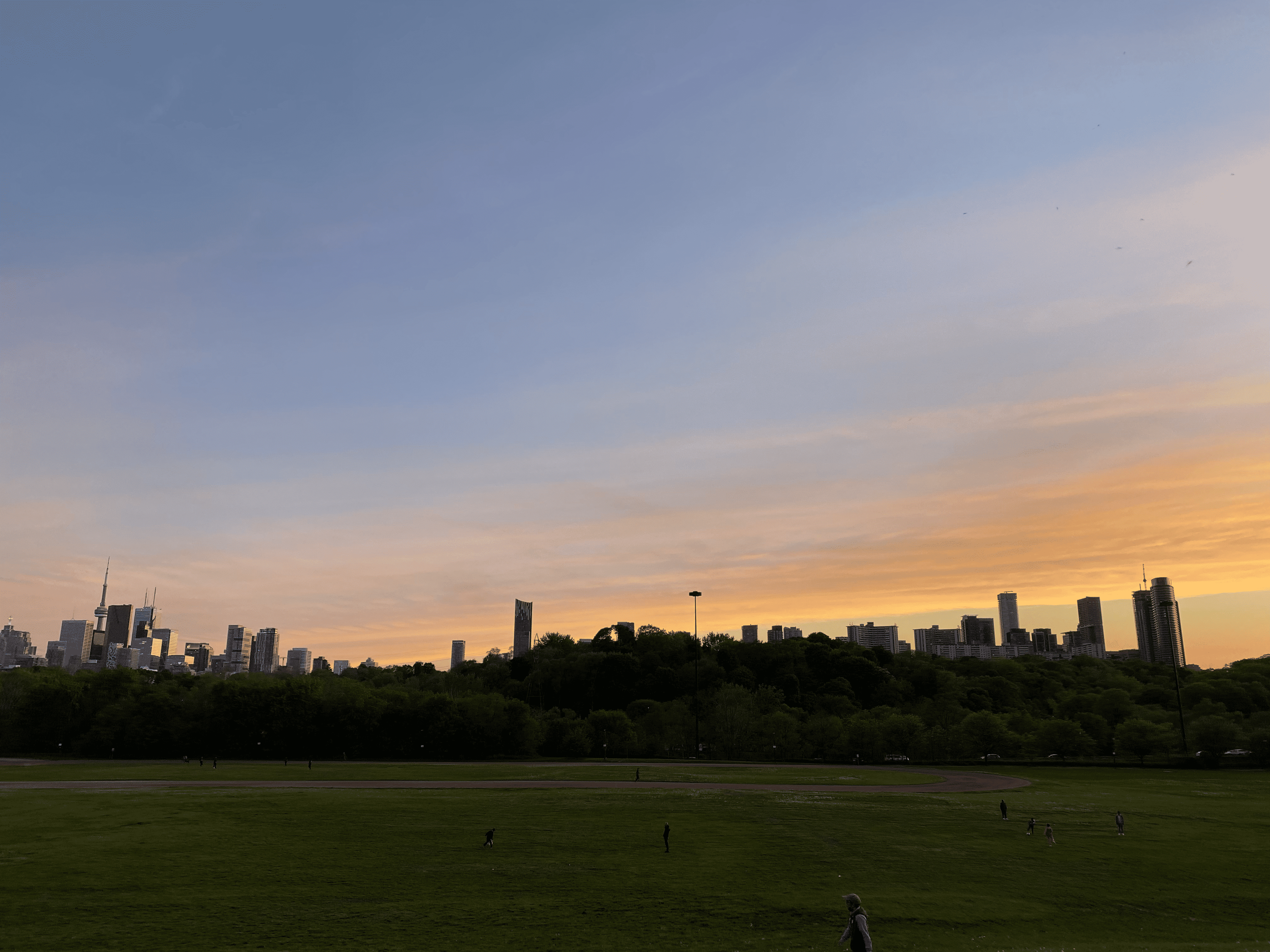

Living in a city can be overwhelming when it comes to personal safety. We've all been there: that moment of uncertainty when walking home late or exploring a new neighborhood. I realized that even though safety statistics and alerts are available, there's a massive gap in how we actually use that information. Most safety tools feel disconnected and impersonal. They throw numbers at you but don't help you make real, actionable decisions about your routes or surroundings. I wanted to change that.

Approach

safeTO was born from a simple mission: transform complex safety data into something truly useful. Here's how I'm making it happen:

- Data Extraction

- Data Transformation

- Data Loading and Analysis

- Predictive Modeling and Insights

Data Extraction: I'm digging into Toronto's data ecosystem, pulling safety information from the Toronto Police Service and the Toronto Open Data Portal. This isn't just about collecting numbers; it's about finding the stories hidden in the data.
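The Toronto Open Data Portal is built on CKAN, so one way to script the extraction step is through CKAN's standard Action API. This is a sketch under assumptions, not necessarily how safeTO does it: the base URL is the developer endpoint the City of Toronto has published (verify it before relying on it), `fetch_package` and `resource_urls` are hypothetical helper names, and the dataset id is a placeholder. Toronto Police data is published through a separate portal and would need its own loader.

```python
import json
import urllib.parse
import urllib.request

# Assumed CKAN endpoint for the Toronto Open Data Portal; verify before use.
CKAN_BASE = "https://ckan0.cf.opendata.inter.prod-toronto.ca"


def fetch_package(package_id: str, base_url: str = CKAN_BASE) -> dict:
    """Call CKAN's package_show action and return the parsed JSON response."""
    query = urllib.parse.urlencode({"id": package_id})
    url = f"{base_url}/api/3/action/package_show?{query}"
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def resource_urls(package: dict, fmt: str = "CSV") -> list[str]:
    """Return download URLs for the package's resources in the given format."""
    if not package.get("success"):
        raise ValueError("CKAN request was not successful")
    return [
        r["url"]
        for r in package["result"].get("resources", [])
        # CKAN format labels vary in case ("CSV" vs "csv"), so compare uppercased
        if str(r.get("format", "")).upper() == fmt.upper()
    ]
```

In practice you would call `resource_urls(fetch_package("<dataset-id>"))` and hand one of the URLs to `pandas.read_csv`. Keeping the JSON parsing separate from the network call means the parsing logic can be tested offline.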

Data Transformation: Using Python's pandas library, I'm turning raw data into a consistent, analysis-ready dataset. Think of it like translating a foreign language: cleaning up messy formats, filling in gaps, and standardizing records so they actually make sense together.
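A minimal sketch of this transformation step in pandas, assuming a raw incident table with hypothetical columns `Occurrence Date`, `Neighbourhood`, and `Offence`. Real exports will use different names and need their own rules; the point is the pattern of normalizing headers, coercing types, filling gaps, and deduplicating.

```python
import pandas as pd


def transform_incidents(raw: pd.DataFrame) -> pd.DataFrame:
    """Standardize a raw incident table (column names are hypothetical)."""
    df = raw.copy()
    # Normalize headers, e.g. "Occurrence Date " -> "occurrence_date"
    df.columns = (
        df.columns.str.strip().str.lower().str.replace(r"[^a-z0-9]+", "_", regex=True)
    )
    # Parse dates; unparseable values become NaT instead of raising
    df["occurrence_date"] = pd.to_datetime(df["occurrence_date"], errors="coerce")
    # Trim and title-case names so "downtown " and "Downtown" match
    df["neighbourhood"] = df["neighbourhood"].str.strip().str.title()
    # Make missing categories explicit rather than silently NaN
    df["offence"] = df["offence"].fillna("Unknown")
    # Rows without a usable date or neighbourhood can't be placed in time or space
    df = df.dropna(subset=["occurrence_date", "neighbourhood"])
    return df.drop_duplicates().reset_index(drop=True)
```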

Data Loading and Analysis: Now comes the detective work. I'm using techniques like feature engineering and heat maps to uncover patterns in urban safety. What makes a neighborhood feel secure? How do different safety indicators relate to one another?
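Feature engineering and heat maps could start as simply as the sketch below, which derives time-of-day features and builds the neighbourhood-by-hour count matrix a heat map would display. The column names, the 22:00 to 05:59 `is_night` window, and the helper names are illustrative assumptions.

```python
import pandas as pd


def add_time_features(df: pd.DataFrame) -> pd.DataFrame:
    """Derive simple temporal features from the occurrence_date column."""
    out = df.copy()
    ts = out["occurrence_date"]
    out["hour"] = ts.dt.hour
    out["weekday"] = ts.dt.day_name()
    # Treat 22:00-05:59 as night; the cut-offs are an arbitrary assumption
    out["is_night"] = (out["hour"] >= 22) | (out["hour"] < 6)
    return out


def heatmap_matrix(df: pd.DataFrame) -> pd.DataFrame:
    """Incident counts with neighbourhoods as rows and hours 0-23 as columns."""
    counts = pd.crosstab(df["neighbourhood"], df["hour"])
    # Ensure every hour has a column even if no incidents occurred in it
    return counts.reindex(columns=range(24), fill_value=0)
```

The returned matrix can be passed directly to seaborn's `heatmap` (or matplotlib's `imshow`) to produce the visual itself.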

Predictive Modeling and Insights: The ultimate goal is a tool that doesn't just show you where danger might be, but helps you understand why. By applying predictive models, we can start to untangle the factors that contribute to neighborhood safety.
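Before reaching for machine learning, it helps to have a baseline that any model must beat. The sketch below is a deliberately naive historical-rate model, not the author's method and not a trained ML model: it estimates each neighbourhood's average daily incident count over a training window, predicts the test window by scaling that rate, and scores the result with mean absolute error. Splitting chronologically rather than randomly avoids leaking future incidents into training. Column and function names are hypothetical.

```python
import pandas as pd


def chronological_split(df: pd.DataFrame, cutoff: str) -> tuple[pd.DataFrame, pd.DataFrame]:
    """Split incidents into (train, test) at a cut-off date, without shuffling."""
    ts = pd.Timestamp(cutoff)
    return df[df["occurrence_date"] < ts], df[df["occurrence_date"] >= ts]


def fit_daily_rates(train: pd.DataFrame, n_days: int) -> pd.Series:
    """Average incidents per day for each neighbourhood over the training window."""
    # n_days is passed explicitly because quiet days leave no rows behind
    return train.groupby("neighbourhood").size() / n_days


def mean_absolute_error(rates: pd.Series, test: pd.DataFrame, n_days: int) -> float:
    """MAE between predicted and actual incident counts over the test window."""
    actual = test.groupby("neighbourhood").size()
    # Neighbourhoods missing from either side count as zero incidents
    idx = rates.index.union(actual.index)
    predicted = rates.reindex(idx, fill_value=0.0) * n_days
    actual = actual.reindex(idx, fill_value=0)
    return float((predicted - actual).abs().mean())
```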

Challenges and Lessons Learned

- Data Cleaning

- Finding Appropriate Data

- Selecting the Right Database

Data Cleaning: Data is messy, and I mean really messy: inconsistent formats, missing information, random noise. Building a cleaning pipeline felt like being a digital janitor, but it was crucial for making sure our insights are actually meaningful.
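One way to keep a cleaning pipeline manageable is to write each rule as a small function and chain them with `DataFrame.pipe`. This sketch treats missing coordinates, or coordinates outside a rough Toronto bounding box (approximate values, assumed here), as noise; the `lat`/`lon` column names are hypothetical.

```python
import pandas as pd

# Rough bounding box around Toronto (approximate values, an assumption)
LAT_RANGE = (43.58, 43.86)
LON_RANGE = (-79.64, -79.11)


def drop_out_of_bounds(df: pd.DataFrame) -> pd.DataFrame:
    """Remove rows whose coordinates are missing or fall outside the box."""
    # between() returns False for NaN, so missing coordinates are dropped too
    ok = df["lat"].between(*LAT_RANGE) & df["lon"].between(*LON_RANGE)
    return df[ok]


def drop_exact_duplicates(df: pd.DataFrame) -> pd.DataFrame:
    """Remove rows that are identical in every column."""
    return df.drop_duplicates()


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Chain the cleaning steps; each one stays small and testable on its own."""
    return (
        df.pipe(drop_out_of_bounds)
        .pipe(drop_exact_duplicates)
        .reset_index(drop=True)
    )
```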

Finding Appropriate Data: Not all data is created equal. I quickly learned that finding high-quality, reliable datasets is more art than science. It's like panning for gold: you have to sift through a lot of dirt to find anything valuable.
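Part of judging a candidate dataset can still be automated. This hypothetical `quality_report` helper summarizes a few signals worth checking before committing to a source: duplicate rows, the share of missing values per column, dates that fail to parse, and the time span covered.

```python
import pandas as pd


def quality_report(df: pd.DataFrame, date_col: str) -> dict:
    """Summarize basic quality signals for a candidate dataset."""
    dates = pd.to_datetime(df[date_col], errors="coerce")
    return {
        "rows": len(df),
        "duplicate_rows": int(df.duplicated().sum()),
        # Share of missing values per column, worst first
        "missing_share": df.isna().mean().sort_values(ascending=False).round(3).to_dict(),
        # Values that were present but could not be parsed as dates
        "unparseable_dates": int(dates.isna().sum() - df[date_col].isna().sum()),
        "date_range": (dates.min(), dates.max()),
    }
```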

Selecting the Right Database: Choosing a database was like solving a complex puzzle. How do you balance performance, accessibility, and scalability? Each decision became a lesson in building robust data systems.
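The writeup doesn't say which database was chosen, so this sketch uses SQLite purely for illustration, since it ships with Python and needs no server. It loads a cleaned table with pandas and indexes the column most queries would filter on; the table, index, and column names are assumptions.

```python
import sqlite3

import pandas as pd


def load_to_sqlite(df: pd.DataFrame, conn: sqlite3.Connection) -> None:
    """Write incidents to a table and index the column queries filter on."""
    df.to_sql("incidents", conn, if_exists="replace", index=False)
    conn.execute("CREATE INDEX IF NOT EXISTS idx_nbhd ON incidents (neighbourhood)")
    conn.commit()


def incidents_by_neighbourhood(conn: sqlite3.Connection) -> pd.DataFrame:
    """Count incidents per neighbourhood, busiest first."""
    query = """
        SELECT neighbourhood, COUNT(*) AS n
        FROM incidents
        GROUP BY neighbourhood
        ORDER BY n DESC
    """
    return pd.read_sql_query(query, conn)
```

SQLite suits a single-user analysis workflow; a server database such as PostgreSQL trades that simplicity for concurrent access and easier scaling, which is exactly the kind of balance this choice involves.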

Outcomes and Next Steps

Outcomes

- Data-Driven Insights

- Predictive Modeling Framework

- Data Management Mastery

- Future-Ready Platform

Data-Driven Insights: We've built a comprehensive safety dataset that goes beyond surface-level statistics. By combining feature engineering with exploratory analysis such as heat maps, we're uncovering the factors that shape neighborhood safety.
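One concrete way to go beyond raw counts is to normalize by population, since busier neighborhoods tend to record more incidents simply because more people are there. This sketch assumes a hypothetical population table keyed by the same `neighbourhood` names as the incident data.

```python
import pandas as pd


def rate_per_1000(incidents: pd.DataFrame, population: pd.DataFrame) -> pd.DataFrame:
    """Incidents per 1,000 residents for each neighbourhood, highest first."""
    counts = incidents.groupby("neighbourhood").size().rename("incidents")
    out = population.set_index("neighbourhood").join(counts, how="left")
    # Neighbourhoods with no recorded incidents get 0 rather than NaN
    out["incidents"] = out["incidents"].fillna(0).astype(int)
    out["per_1000"] = out["incidents"] * 1000 / out["population"]
    return out.sort_values("per_1000", ascending=False)
```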

Predictive Modeling Framework: We've laid the groundwork for a system that can predict safety trends and help explain what drives them.

Data Management Mastery: Along the way, we've developed robust data pipelines and storage solutions that can handle complex urban safety information.

Future-Ready Platform: This isn't just a project; it's the foundation for a new way of thinking about urban safety. Interactive maps, real-time notifications, actionable insights: the potential is enormous.

Next Steps

- Advanced Predictive Modeling

- User Interface Revolution

- Community Collaboration

- Real-World Testing

- Scaling Up

Advanced Predictive Modeling: We're going to dig deeper, using machine learning to create predictive safety scores. The aim is to understand not just where risks exist, but why they exist.
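How safeTO will turn predictions into safety scores isn't specified yet. One possible mapping, sketched here as an assumption, converts each neighbourhood's predicted incident rate into a 0-100 score by rank, where 100 means the lowest predicted risk in the city.

```python
import pandas as pd


def safety_scores(predicted_rate: pd.Series) -> pd.Series:
    """Map predicted incident rates to 0-100 (100 = lowest predicted risk)."""
    n = len(predicted_rate)
    if n < 2:
        # A single neighbourhood has nothing to be ranked against
        return pd.Series(100.0, index=predicted_rate.index)
    # 0 for the lowest predicted rate, 1 for the highest; ties share a rank
    pct = (predicted_rate.rank(method="average") - 1) / (n - 1)
    return ((1 - pct) * 100).round(1)
```

Because the score is relative, 50 means "middle of the city", not "50% safe". Ranking instead of scaling the raw rates also keeps one extreme outlier from compressing every other neighbourhood's score.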

User Interface Revolution: It's time to make safety data truly accessible. We're designing an intuitive app that turns complex data into simple, actionable advice.

Community Collaboration: Safety is a community effort. We'll be reaching out to local authorities, neighborhood groups, and residents to build a more complete safety picture.

Real-World Testing: No more working in a vacuum. We'll launch a pilot version and gather real feedback from the people who matter most: the community.

Scaling Up: This is just the beginning. Our vision is to expand beyond Toronto, building a scalable platform that helps urban residents everywhere feel more secure.